Quality improvement (QI) is an ongoing and evolving process. QI activities consist of monitoring and reporting outcomes and results, using

- Instructional coding manuals

- Standard operating procedures

- Data edits

- Data quality and completeness audits

- Special studies

- Education and training activities

SEER QI activities are goal-oriented, protocol-driven, and results-centric. Formal protocols outline a detailed study plan that includes a plan for statistical analysis. Activities include setting standards for data collection by cancer registries and providing technical, instructional coding manuals, as well as scientific resource manuals.

SEER provides training and educational materials that are helpful to cancer registrars seeking credentialing. SEER also leads workshops that provide advanced training in data collection and coding.

Both quantitative and qualitative results from studies are tied directly into the planning and development of targeted education and training materials and programs.

High Quality Data

The SEER Program is viewed as the standard for quality among cancer registries around the world. Each SEER Program registry has a contractual obligation to meet specifically defined data quality goals on an ongoing basis.

The SEER Program has also developed an extensive set of field edits that prevent and correct errors in the data. Electronic edits provide the means to authenticate codes, check for missing data, and check for interrelated data item errors.
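The three kinds of electronic edit named above (code validation, missing-data checks, and inter-item checks) can be illustrated with a minimal sketch. The field names, the code set, and the inter-item rule below are hypothetical placeholders chosen for illustration; they are not the actual SEER edit definitions.

```python
from typing import Dict, List

# Hypothetical values for illustration only; not actual SEER edit metadata.
VALID_GRADE_CODES = {"1", "2", "3", "4", "9"}
REQUIRED_FIELDS = ("primary_site", "grade", "surgery_code")


def run_edits(record: Dict[str, str]) -> List[str]:
    """Return a list of edit-failure messages for one case record."""
    failures = []

    # 1) Missing-data check: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            failures.append(f"missing: {field}")

    # 2) Code validation: a coded value must belong to the allowed code set.
    grade = record.get("grade")
    if grade and grade not in VALID_GRADE_CODES:
        failures.append(f"invalid code: grade={grade}")

    # 3) Inter-item check (assumed rule): surgery code "00" is taken to mean
    #    "no surgery", so a surgery date should not be recorded with it.
    if record.get("surgery_code") == "00" and record.get("surgery_date"):
        failures.append("inconsistent: surgery_date with surgery_code=00")

    return failures
```

A record that passes all three checks returns an empty list; each failing check adds one message, so a reviewer can see every problem in a case at once.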

Collaborative efforts with national committees and national data standards contribute to high data quality.

Quality Improvement Experts (QIE)

In addition to the expert Quality Improvement (QI) staff located in the main office at NCI, the SEER Program also utilizes expert QI staff resident in each of the SEER central registries and outside contractors, all under the direction of the NCI SEER QI Manager. This cadre of staff is referred to as the Quality Improvement Experts (QIE) group. Statisticians and other experts are involved as needed.

With representation from all SEER registries, the QIE group is the “key” to many quality improvement activities. Working with NCI SEER staff, the QIE group’s goals are to

- Improve communication and ensure engagement of registry experts in planning and implementation of the SEER Program’s quality improvement activities

- Focus on the quality of outcomes (data sets) and processes (central registry operations)

- Conduct protocol-based evaluations of data sets and operations

- Engage and maximize the capacity of this valuable resource of experts

Quality Improvement = Quality Assurance + Quality Control

Under the umbrella of quality improvement, NCI SEER includes two main components: Quality Assurance (QA) and Quality Control (QC).

Quality Assurance

Quality Assurance activities include those that take place during data collection, before the data are submitted from the registries to NCI SEER. For example, training and education of data collection experts fall under quality assurance. Instructional coding manuals, standard operating procedures, and data edits are also examples of QA activities.

Quality Control

Quality Control activities include those that take place after the data have been submitted from the registries to NCI SEER. Data quality and completeness audits, special studies, and benchmarking are examples of QC activities.

Quality Audit Plans (QAPs)

QAPs are broad quality assessments of data items which assess data quality in terms of completeness, consistency, outliers, and trends. Conducting QAPs informs NCI SEER and SEER registries about changes over time and data quality differences by registry. The QAPs can connect findings to changes in coding instructions and explore research implications.

The QAP is a guideline developed for a specific data element or class of data elements to: 1) assess the quality of SEER data elements, 2) verify that underlying data acquisition (e.g., central registry operations) and utilization (e.g., research) are aligned with the scope/limitations of the data elements, and 3) identify corrective action plan (CAP) recommendations if an underlying source of data errors has been identified.
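As one illustration of the completeness dimension of a QAP, the sketch below computes the share of cases with a known value for a data item, broken out by registry. The record layout, the `registry` key, and the set of values treated as unknown are assumptions made for the example, not SEER definitions.

```python
from collections import defaultdict

# Assumed convention for this sketch: empty, None, or "9" means unknown.
UNKNOWN_VALUES = {"", "9", None}


def completeness_by_registry(records, item):
    """Return {registry: fraction of cases with a known value for `item`}."""
    totals = defaultdict(int)
    known = defaultdict(int)
    for rec in records:
        registry = rec["registry"]
        totals[registry] += 1
        if rec.get(item) not in UNKNOWN_VALUES:
            known[registry] += 1
    return {registry: known[registry] / totals[registry] for registry in totals}
```

Running the same calculation per diagnosis year instead of per registry would give the trend view that the QAP description also mentions.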

Purpose of a Quality Audit Plan

- Systematize ongoing quality activities within SEER

- Develop methods to proactively address the changing data landscape required for cancer surveillance

- Provide a formal method for prioritization of existing and future quality efforts

- Using a standardized method, identify ways to improve data quality and provide better population-based information to users

- Provide more consistent communication to registries and data users with respect to quality efforts within SEER

- Develop a process to know when and how unreleased data could be released

Full Focused Audits

Focused Audits are in-depth evaluations which provide a comprehensive and detailed analysis of specific data items of interest and related data items. Focused audits use multiple methods of review and analyses including registry case reviews, development of gold standards, and natural language processing methods. Results from focused audits can identify new training opportunities, expand methods of evaluation, and inform corrective action plans for a data item.

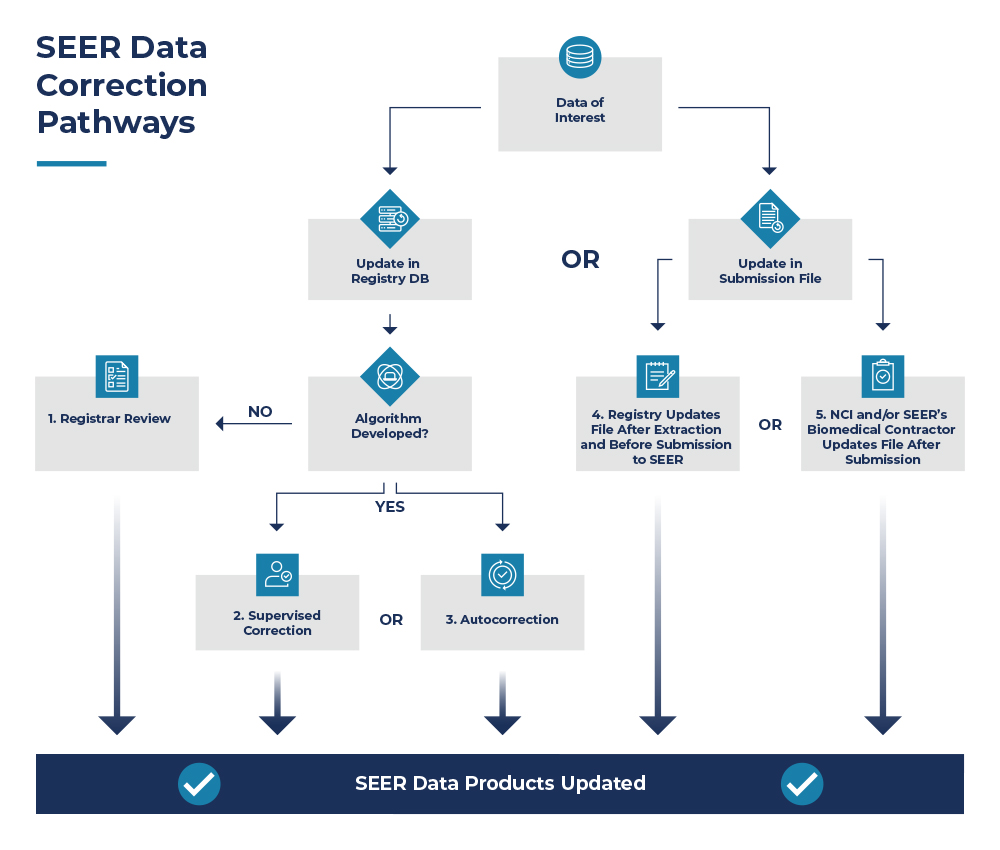

This graphic demonstrates the corrective actions possible after an initial audit has been conducted and results have been shared with the SEER registries. Ultimately, there are five possible routes that SEER recommends for enhancing data accuracy once an issue has been identified.

Three options (#1, #2, #3), described below, are available if a registry wishes to make corrections directly in its database prior to submitting to NCI SEER.

| Option | Description |
|---|---|
| 1. Registrar Review | Registrar manually reviewing and correcting discrepant cases provided by SEER’s Biomedical Computing Contractor, typically through SEER*DMS. |
| 2. Supervised Correction | Registrar manually reviewing a case or case values flagged by an algorithm to be potentially incorrect. Registrar will compare originally coded value to the coded value identified by the algorithm and determine the correct value. |
| 3. Autocorrection | Using an algorithm to apply logic to the data based on incompatibility of values of two or more data items. The case is not reviewed by a registrar and autocorrection is only effective if the performance of the algorithm is high. |

The remaining two options are available if a registry is not able to make changes in their database. In this situation, they may opt to make changes to the data file they plan to submit to NCI SEER (#4) or have NCI and/or SEER’s Biomedical Computing Contractor make changes to the data file they submit to NCI SEER (#5).

Examples

- Registrar Review

  - SSF-7/Grade for Breast

- Supervised Correction

  - Circumferential Resection Margin (CRM)

  - Melanoma Tumor Depth

- Autocorrection

  - Pathological Grade for Bladder

- Registry Update File After Extraction and Before Submission to SEER

  - To date, registries have not elected this option.

- NCI and/or SEER’s Biomedical Computing Contractor Update File After Submission

  - SSF-7/Grade for Breast

Brief Audit Descriptions

Circumferential Resection Margin (CRM)

NCI conducted a preliminary data analysis on CRM in colon and rectal cancer cases. Results showed data quality issues related to discrepancies between CRM data items and surgery data items: a total of six problem areas were identified. Due to the significance, the full focused audit is being conducted in rectal cancer cases only. A portion of the rectal cancer cases identified as having errors were corrected through manual review by the registrars, and NLP methods are being used to develop data abstraction tools for the remaining errors.

Melanoma Tumor Depth

Various SEER registries communicated issues regarding the coding of melanoma tumor depth. Decimal errors, transcription errors, incomplete information and miscoding of tumor size for tumor depth were identified after internal reviews were conducted. An algorithm was developed to identify accurate melanoma depth measurement values. After conducting testing and validation, it was recommended to use this algorithm as a tool for supervised error correction. This algorithm was released in August 2022. Incoming cases are “flagged” if the coded value for melanoma tumor depth differs from the algorithm-selected value. Registrars must review these flagged cases and either override the flag or make corrections as needed.
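The flagging step of this supervised correction can be sketched as follows. The melanoma depth algorithm itself is not reproduced here; the sketch assumes its output is already available as `algorithm_depth_mm`, and the class and field names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class DepthCase:
    case_id: str
    coded_depth_mm: float      # value entered by the registrar
    algorithm_depth_mm: float  # value selected by the algorithm (assumed given)


def flag_for_review(cases, abs_tol=1e-9):
    """Return IDs of cases whose coded depth differs from the algorithm's value."""
    return [
        case.case_id
        for case in cases
        if not math.isclose(case.coded_depth_mm, case.algorithm_depth_mm, abs_tol=abs_tol)
    ]
```

A case coded as 12.0 mm where the algorithm selects 1.2 mm (a decimal error of the kind described above) would be flagged, and the registrar would then either override the flag or correct the value.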

Pathological Grade for Bladder

NCI conducted an analysis examining the data quality of pathological grade for bladder cases diagnosed 2018-2020 when a TURB (transurethral resection of the bladder) was performed. Over 7,000 cases (11% of bladder cases) were coded incorrectly according to the instructions provided in the Grade Manual. NCI suspected that registrars were coding pathological grade based on the pathology report for the TURB; however, the Grade Manual states that a TURB does not qualify for pathological grade, and when cystectomy is not performed the grade must be coded to 9. A review of sample cases by registrars confirmed this suspicion. An autocorrect of these data was recommended: when surgery of primary site is not a cystectomy, pathological grade is set to 9.
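The autocorrect rule for bladder pathological grade can be expressed as a small function. This is a minimal sketch: the field names are hypothetical, and the set of surgery codes that count as a cystectomy is passed in by the caller because the actual code list is not given here.

```python
def autocorrect_path_grade(record, cystectomy_codes):
    """Return a copy of `record` with pathological grade set to "9"
    when the surgery of primary site is not a cystectomy.

    `cystectomy_codes` is a caller-supplied set of surgery codes that
    qualify as cystectomy (an assumption of this sketch).
    """
    corrected = dict(record)  # leave the original record untouched
    if corrected.get("surgery_code") not in cystectomy_codes:
        corrected["path_grade"] = "9"
    return corrected
```

Returning a corrected copy rather than modifying the record in place keeps the originally coded value available for comparison.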

In addition to reviewing grade, the coding of muscle invasion was reviewed. It was determined that registrars were coding specific muscle invasion (superficial vs deep) when only a TURB was done. It was confirmed with AJCC that a cystectomy is needed to determine whether there is superficial or deep muscle invasion. If a TURB is done, then muscle invasion, NOS should be coded. Instructions were updated in the manual, edits developed, and cases from 2018 forward were autocorrected.

Prostate Specific Antigen (PSA)

A routine quality assurance study by registrars who abstracted standardized cases identified concerns regarding the quality of the Prostate Specific Antigen (PSA) value in all surveillance data, including SEER. The SEER Program completed a comprehensive review of PSA consolidated values in comparison to text documentation from abstract records across all registries. The meaningful error rate for PSA coding in 2012 SEER data was much lower than expected, at 5.70% overall. The SEER registries reviewed and corrected PSA values from 2004-2014, and the data were re-released in the public use file in April 2017.

SSF-7/Grade for Breast

Over 60,000 breast cancer cases diagnosed between 2000 and 2017 had coding discrepancies for Grade (based on 2014 instructions). The mismatches were between Site-Specific Factor 7 (Nottingham/Bloom Richardson Grade) and the recorded Grade. Autocorrections were recommended to address these discrepancies. After some discussion, most registries chose to manually review and correct cases. A few registries gave their approval for cases to be autocorrected in the public use file or on SEER*DMS (Option #5). The autocorrect assigns both SSF-7 and Grade a value of 9 when they don’t match. This change took effect with the April 2023 data release.

NCI SEER Guidance on Selecting Sample Size for Registry Quality Studies

NCI has taken different approaches to determining how many cases should be evaluated.

- What is feasible from the perspective of registries/registrars (i.e., considering workload, time, resources, etc.).

  - Typically, we suggest 100-300 cases.

- Statistical approach

  - Decide what level of the indicator is acceptable. Typically, NCI SEER’s goal is 80-85%.

  - Calculate sample size based on power.

    - This calculation depends on what is believed to be the true distribution in the population. This can be a limitation: in our experience, the approach was not very successful because we realized at the end of the study that our educated guess about the prevalence of the indicator of interest in the population was incorrect.
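A power-based calculation of this kind can be sketched as below. The sketch assumes a two-sided one-sample test of a proportion under the normal approximation, where `p0` is the acceptable level of the indicator and `p1` is the value believed to be true in the population. The test choice, function name, and default `alpha` and `power` are assumptions for illustration, not NCI SEER's documented method.

```python
import math
from statistics import NormalDist


def sample_size_one_proportion(p0, p1, alpha=0.05, power=0.80):
    """Approximate number of cases needed to detect that the true
    proportion is p1 rather than the acceptable level p0."""
    if not (0 < p0 < 1 and 0 < p1 < 1):
        raise ValueError("p0 and p1 must be strictly between 0 and 1")
    if p0 == p1:
        raise ValueError("p0 and p1 must differ")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power

    numerator = (z_alpha * math.sqrt(p0 * (1 - p0))
                 + z_beta * math.sqrt(p1 * (1 - p1)))
    return math.ceil((numerator / (p1 - p0)) ** 2)
```

The required sample size rises sharply as the assumed `p1` moves closer to `p0`, which is why an incorrect guess about the true prevalence can leave a study with far too few or too many cases.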

Data Quality Collaborators

SEER works with various agencies and associations to further the cause of improving data quality. Cancer PathCHART is an example of an initiative that collaborates with multiple organizations.

- American College of Surgeons (ACS) Commission on Cancer (CoC)

- American Joint Committee on Cancer (AJCC)

- Centers for Disease Control and Prevention (CDC) National Program of Cancer Registries (NPCR)

- College of American Pathologists (CAP)

- International Association of Cancer Registries (IACR)

- International Collaboration on Cancer Reporting (ICCR)

- National Cancer Registrars Association (NCRA)

- North American Association of Central Cancer Registries, Inc. (NAACCR, Inc.)

- SEER Registries

- Statistics Canada